
Research Interest

My research focuses on material appearance representation, from acquisition to rendering. I am working with opticians and physicists to improve the quality and speed of (SV)-BRDF and roughness acquisition. This research also leads to work on new mathematical representations and parametrizations of the (SV)-BRDF space.

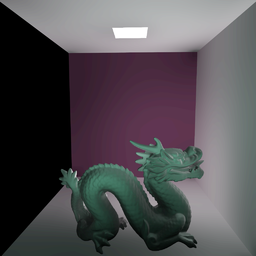

During my Ph.D., I also worked on global illumination for offline and real-time rendering and on sketch-based user interfaces for designing highlights. I am very interested in emerging technologies such as OptiX, which opens new horizons for developing hybrid rendering systems.

Publications

|

|

A Composite BRDF Model for Hazy Gloss

|

|

P. Barla, R. Pacanowski, P. Vangorp

|

|

Computer Graphics Forum (EGSR 2018).

|

|

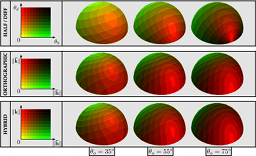

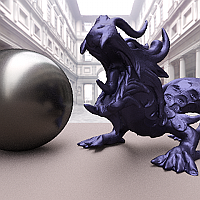

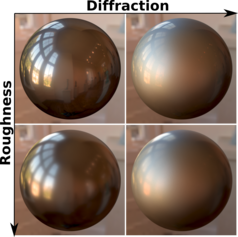

We introduce a bidirectional reflectance distribution function (BRDF) model for the rendering of materials that exhibit hazy

reflections, whereby the specular reflections appear to be flanked by a surrounding halo. The focus of this work is on artistic

control and ease of implementation for real-time and off-line rendering. We propose relying on a composite material based

on a pair of arbitrary BRDF models; however, instead of controlling their physical parameters, we expose perceptual parameters

inspired by visual experiments [VBF17]. Our main contribution then consists in a mapping from perceptual to physical parameters

that ensures the resulting composite BRDF is valid in terms of reciprocity, positivity and energy conservation. The immediate

benefit of our approach is to provide direct artistic control over both the intensity and extent of the haze effect, which

is not only necessary for editing purposes, but also essential to vary haziness spatially over an object surface. Our solution

is also simple to implement as it requires no new importance sampling strategy and relies on existing BRDF models. Such a

simplicity is key to approximating the method for the editing of hazy gloss in real-time and for compositing.

|

|

Paper (Author's version uploaded on HAL)

|

Supplemental Material


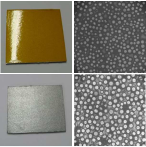

Diffraction Removal in an Image-based BRDF Measurement Setup

|

|

Antoine Lucat, Ramon Hegedus and Romain Pacanowski

|

|

EI 2018 - Electronic Imaging Material Appearance 2018. Jan 2018, Burlingame, California.

|

|

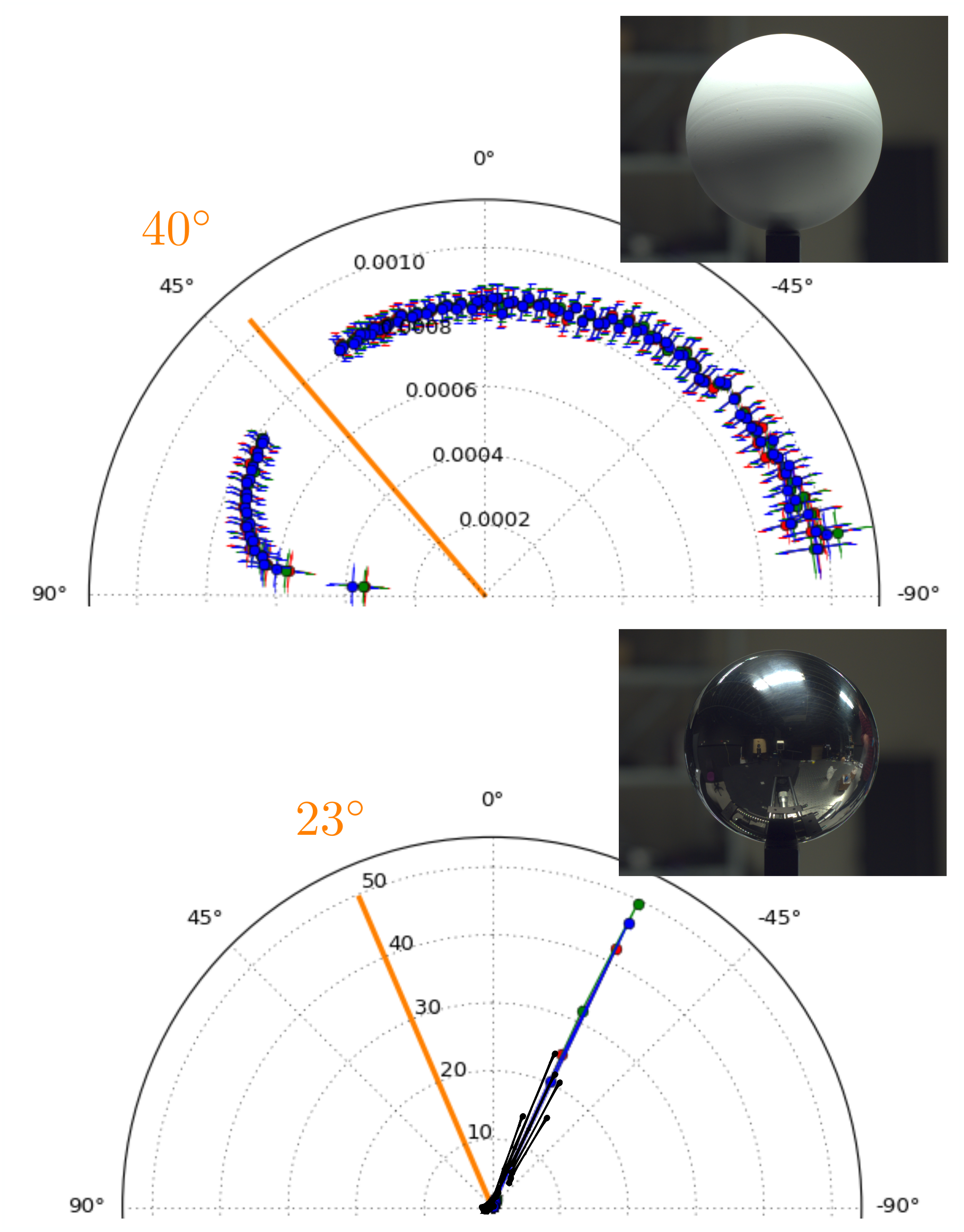

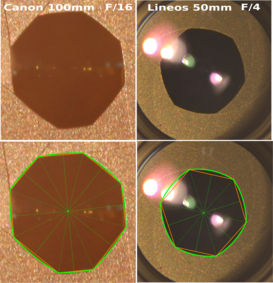

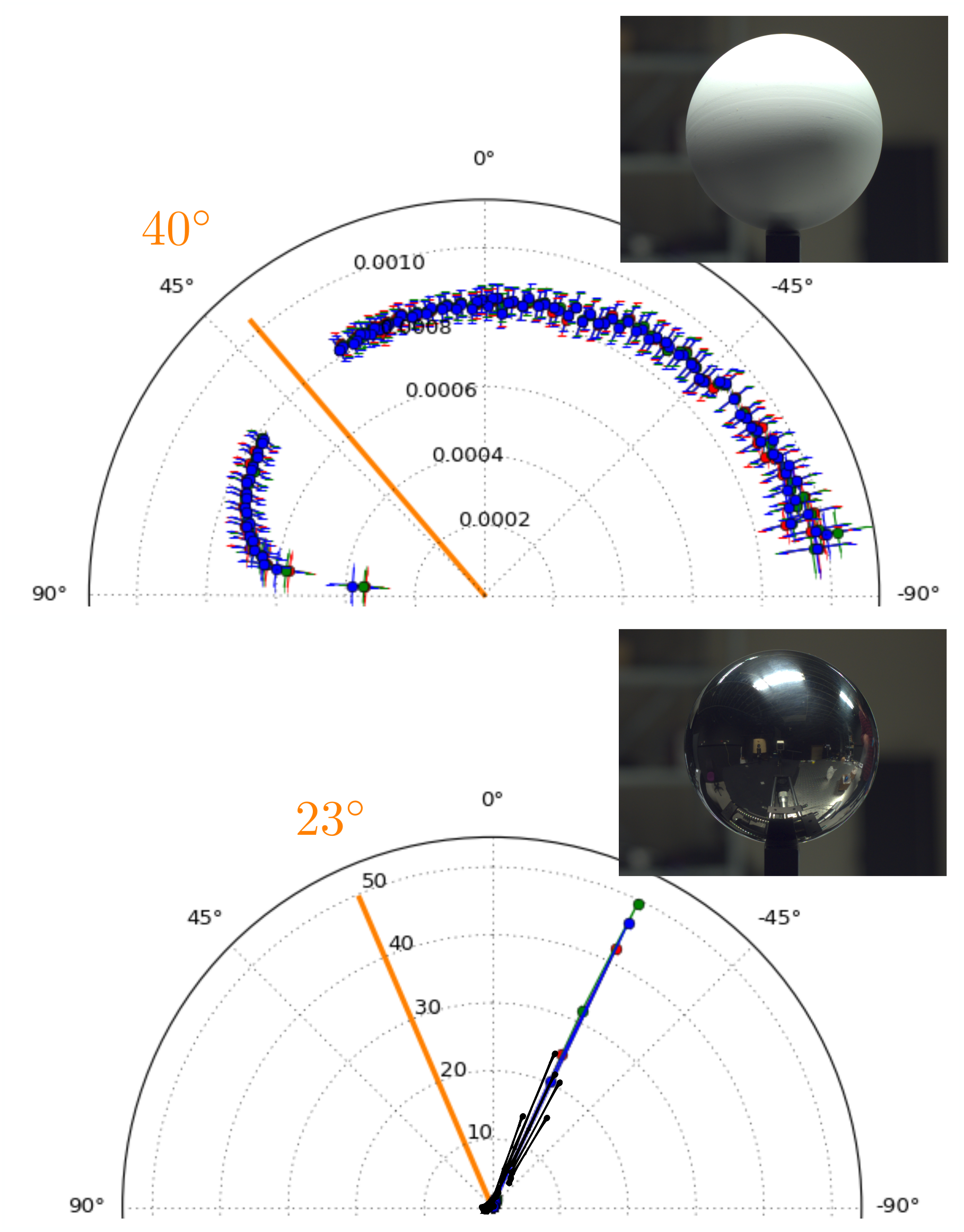

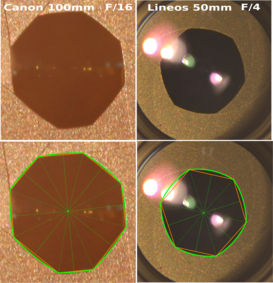

Material appearance is traditionally represented through its Bidirectional Reflectance Distribution Function (BRDF), which quantifies how incident light is scattered from a surface over the hemisphere. To speed up the measurement of the BRDF for a given material, which can require millions of measurement directions, image-based setups are often used for their ability to parallelize the acquisition process: each pixel of the camera gives one unique measurement configuration. With highly specular materials, High Dynamic Range (HDR) imaging techniques are used to acquire the whole BRDF dynamic range, which can exceed 10 orders of magnitude. Unfortunately, HDR can introduce star-burst patterns around highlights arising from diffraction by the camera aperture. Therefore, while trying to keep track of uncertainties throughout the measurement process, one has to be careful to include this underlying diffraction convolution kernel. A purposely developed algorithm is used to remove most of the pixels polluted by diffraction, which increases the measurement quality of specular materials, at the cost of discarding a large number of BRDF configurations (up to 90% with specular materials). Finally, our setup reaches a 1.5 degree median accuracy (considering all the possible geometrical configurations), with a repeatability from 1.6% for the most diffuse materials to 5.5% for the most specular ones. Our new database, with its quantified uncertainties, will be helpful for comparing the quality and accuracy of the different experimental setups and for designing new image-based BRDF measurement devices.

|

|

Paper (Author's version uploaded on HAL)


Diffraction effects detection for HDR image-based measurements

|

|

Antoine Lucat, Ramon Hegedus and Romain Pacanowski

|

|

Optics Express, Optical Society of America, 2017.

|

|

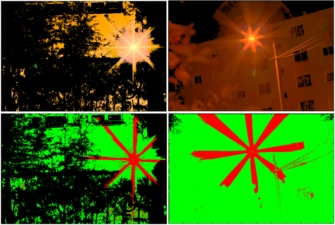

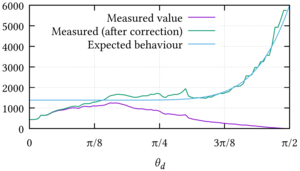

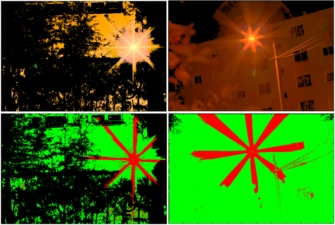

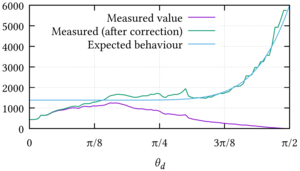

Modern imaging techniques have proved to be very efficient to recover a scene with high dynamic range (HDR) values. However,

this high dynamic range can introduce star-burst patterns around highlights arising from the diffraction of the camera aperture.

The spatial extent of this effect can be very wide and alters pixels values, which, in a measurement context, are not reliable

anymore. To address this problem, we introduce a novel algorithm that, utilizing a closed-form PSF, predicts where the diffraction

will affect the pixels of an HDR image, making it possible to discard them from the measurement. Our approach gives better

results than common deconvolution techniques and the uncertainty values (convolution kernel and noise) of the algorithm output

are recovered.

|

|

Paper (Author's version uploaded on HAL)


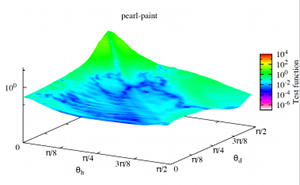

A Two-Scale Microfacet Reflectance Model Combining Reflection and Diffraction

|

|

Nicolas Holzschuch and Romain Pacanowski

|

|

ACM Transactions on Graphics, Association for Computing Machinery. Proceedings of Siggraph. 2017

|

|

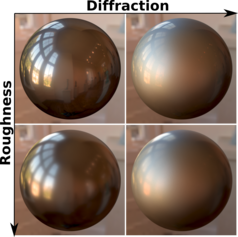

Adequate reflectance models are essential for the production of photorealistic images. Microfacet reflectance models predict

the appearance of a material at the macroscopic level based on microscopic surface details. They provide a good match with

measured reflectance in some cases, but not always. This discrepancy between the behavior predicted by microfacet models

and the observed behavior has puzzled researchers for a long time. In this paper, we show that diffraction effects in the

micro-geometry provide a plausible explanation. We describe a two-scale reflectance model, separating between geometry details

much larger than wavelength and those of size comparable to wavelength. The former model results in the standard Cook-Torrance

model. The latter model is responsible for diffraction effects. Diffraction effects at the smaller scale are convolved by

the micro-geometry normal distribution. The resulting two-scale model provides a very good approximation to measured reflectances.

|

|

Paper (Author's version uploaded on HAL)

|

Supplemental Material

|

|

Slides

(with comments here)

from the Siggraph Presentation.

|

Slides

from an early presentation at the French BRDF Workshop "Tout sur les BRDFs"

|

|

Source Code Implementation of the Model.

|

Additional Insight on the implementation of the He et al. BRDF Model

|

|

INRIA

Technical Report presenting the first version of the Model

|

INRIA

Technical Report presenting an improved version of the model (before the one published at Siggraph)


The Effects of Digital Cameras Optics and Electronics for Material Acquisition

|

|

Nicolas Holzschuch and Romain Pacanowski

|

|

EUROGRAPHICS WORKSHOP ON MATERIAL APPEARANCE MODELING, Jun 2017, Helsinki, Finland. 2017 Conference papers

|

|

For material acquisition, we use digital cameras and process the pictures. We usually treat the cameras as perfect pinhole

cameras, with each pixel providing a point sample of the incoming signal. In this paper, we study the impact of camera optical

and electronic systems. Optical system effects are modelled by the Modulation Transfer Function (MTF). Electronic System

effects are modelled by the Pixel Response Function (PRF). The former is convolved with the incoming signal, the latter is

multiplied with it. We provide a model for both effects, and study their impact on the measured signal. For high frequency

incoming signals, the convolution results in a significant decrease in measured intensity, especially at grazing angles.

We show that this model explains the strange behaviour observed in the MERL BRDF database at grazing angles.

|

|

Paper (Author's version uploaded on HAL)


Diffraction Prediction in HDR measurements

|

|

Ramon Hegedus, Antoine Lucat, Romain Pacanowski

|

|

EUROGRAPHICS WORKSHOP ON MATERIAL APPEARANCE MODELING, Jun 2017, Helsinki, Finland. 2017 Conference papers

|

|

Modern imaging techniques have proved to be very efficient to recover a scene with high dynamic range values. However, this

high dynamic range can introduce star-burst patterns around highlights arising from the diffraction of the camera aperture.

The spatial extent of this effect can be very wide and alters pixels values, which, in a measurement context, are not reliable

anymore. To address this problem, we introduce a novel algorithm that predicts, from a closed-form PSF, where the diffraction

will affect the pixels of an HDR image, making it possible to discard them from the measurement. Our approach gives better

results than common deconvolution techniques and the uncertainty values (convolution kernel and noise) of the algorithm output

are recovered.

|

|

Paper (Author's version uploaded on HAL)


Isotropic BRDF Measurements with Quantified Uncertainties

|

|

Ramon Hegedus, Antoine Lucat, Justine Redon, Romain Pacanowski

|

|

EUROGRAPHICS WORKSHOP ON MATERIAL APPEARANCE MODELING, Jun 2016, Dublin, Ireland. 2016.

|

|

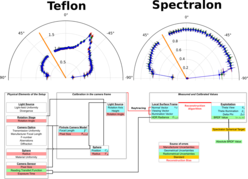

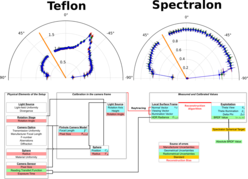

Image-based BRDF measurements on spherical material samples present a great opportunity to shorten significantly the acquisition

time with respect to more traditional, non-multiplexed measurement methods for isotropic BRDFs. However, it has never been analyzed in depth what measurement accuracy can be achieved in such a setup, what the main contributing uncertainty factors are, and how they relate to calibration procedures. In this paper we present a new set of isotropic BRDF measurements with

their radiometric and geometric uncertainties acquired within such an imaging setup. We discuss the most prominent optical

phenomena that affect measurement accuracy and pave the way for more thorough uncertainty analysis in forthcoming image-based

BRDF measurements. Our newly acquired data with their quantified uncertainties will be helpful for comparing the quality

and accuracy of the different experimental setups and for designing other such image-based BRDF measurement devices.

|

|

Paper (Author's version uploaded on HAL)


Cache-friendly micro-jittered sampling

|

|

A. Dufay, P. Lecocq, Romain Pacanowski, J.E. Marvie, X. Granier

|

|

SIGGRAPH 2016, Jul 2016. Conference papers (TALK).

|

|

Monte-Carlo integration techniques for global illumination are popular on GPUs thanks to their massive parallel architecture,

but efficient implementation remains challenging. The use of randomly de-correlated low-discrepancy sequences in the path-tracing

algorithm allows faster visual convergence. However, the parallel tracing of incoherent rays often results in poor memory

cache utilization, reducing the ray bandwidth efficiency. Interleaved sampling [Keller et al. 2001] partially solves this

problem, by using a small set of distributions split in coherent ray-tracing passes, but the solution is prone to structured

noise. On the other hand, ray-reordering methods [Pharr et al. 1997] group stochastic rays into coherent ray packets, but their implementation adds a sorting cost on the GPU [Moon et al. 2010] [Garanzha and Loop 2010]. We introduce a micro-jittering technique for faster multi-dimensional Monte-Carlo integration in ray-based rendering engines. Our method improves ray coherency between GPU threads using a slightly altered low-discrepancy sequence rather than using ray-reordering methods. Compatible with any low-discrepancy sequence and independent of the importance sampling strategy, our method achieves visual quality comparable to classic de-correlation methods, like Cranley-Patterson rotation [Kollig and Keller 2002], while reducing rendering times in all scenarios.
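
For readers unfamiliar with the de-correlation baseline named in this abstract, here is a minimal sketch of Cranley-Patterson rotation applied to a 2D Halton sequence. It shows only the classic random shift (one offset per pixel, wrapped around the unit square), not the micro-jittering method of the paper. The function names and the choice of the Halton sequence are assumptions made for illustration.

```python
import random

def radical_inverse(index, base):
    """Van der Corput radical inverse of `index` in the given base."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result

def halton_2d(count):
    """First `count` points of the 2D Halton sequence (bases 2 and 3)."""
    return [(radical_inverse(i, 2), radical_inverse(i, 3))
            for i in range(1, count + 1)]

def cranley_patterson(points, shift):
    """Shift every point by the same offset, wrapping around in [0, 1)."""
    return [((x + shift[0]) % 1.0, (y + shift[1]) % 1.0) for x, y in points]

# One random shift per pixel de-correlates neighbouring pixels
# while each pixel keeps the structure of the base sequence.
rng = random.Random(0)
base_points = halton_2d(8)
pixel_shift = (rng.random(), rng.random())
rotated = cranley_patterson(base_points, pixel_shift)
```

Because every pixel draws an independent shift, neighbouring GPU threads trace unrelated rays, which is the incoherence the abstract sets out to reduce.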

|

|

Paper (Author's version uploaded on HAL)

|

See also the chapter from Arthur Dufay's PhD thesis manuscript

|

|

A Video explaining the technique and showing additional results

|

|

|

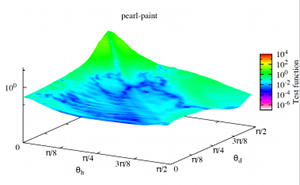

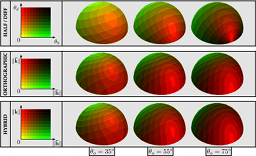

In praise of an alternative BRDF parametrization

|

|

P. Barla, L. Belcour, and R. Pacanowski

|

|

Eurographics Workshop on Material Appearance Modeling. June 2015.

|

|

In this paper, we extend the work of Neumann et al. [NNSK99] and Stark et al. [SAS05] to a pair of 4D BRDF parametrizations with explicit changes of variables. We detail their mathematical properties and relationships to the commonly-used halfway/difference parametrization, and discuss their benefits and drawbacks using a few analytical test functions and measured BRDFs. Our preliminary study suggests that the alternative parametrization inspired by Stark et al. [SAS05] is superior, and should thus be considered in future work involving BRDFs.
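
As a point of reference for the halfway/difference parametrization mentioned above, the following sketch computes its two polar angles from a pair of unit directions. It covers only that commonly used baseline, not the alternative parametrizations studied in the paper. The function names and the convention that the surface normal is the z axis are assumptions made for illustration.

```python
import math

def normalize(v):
    """Return `v` scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def halfway_difference_angles(w_in, w_out):
    """Return (theta_h, theta_d) for unit directions expressed in a frame
    whose z axis is the surface normal.
    Assumes w_in + w_out is not the zero vector."""
    half = normalize(tuple(a + b for a, b in zip(w_in, w_out)))
    # Clamp before acos to guard against rounding slightly outside [-1, 1].
    theta_h = math.acos(max(-1.0, min(1.0, half[2])))
    theta_d = math.acos(max(-1.0, min(1.0, dot(w_in, half))))
    return theta_h, theta_d

# Mirror configuration: the halfway vector coincides with the normal.
angle = 0.5
w_in = (math.sin(angle), 0.0, math.cos(angle))
w_out = (-math.sin(angle), 0.0, math.cos(angle))
theta_h, theta_d = halfway_difference_angles(w_in, w_out)
```

In the mirror configuration, `theta_h` is zero and `theta_d` equals the incidence angle up to rounding, which is why specular peaks align along one axis in this parametrization.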

|

|

Paper (Author's version)


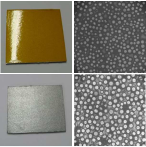

Identifying diffraction effects in measured reflectances

|

|

N. Holzschuch and R. Pacanowski

|

|

Eurographics Workshop on Material Appearance Modeling. June 2015.

|

|

There are two different physical models connecting the micro-geometry of a surface and its physical reflectance properties (BRDF). The first, Cook-Torrance, assumes geometrical optics: light is reflected and masked by the micro-facets. In this model, the BRDF depends on the probability distribution of micro-facet normals. The second, Church-Takacs, assumes diffraction by the micro-geometry. In this model, the BRDF depends on the power spectral distribution of the surface height. Measured reflectances have been fitted to either model, but the results are not entirely satisfying. In this paper, we assume that both models are valid in BRDFs, but correspond to different areas in parametric space. We present a simple test to classify, locally, parts of the BRDF into the Cook-Torrance model or the diffraction model. The separation makes it easier to fit models to measured BRDFs.

|

|

Paper (Author's version)


BRDF Measurements and Analysis of Retroreflective Materials

|

|

L. Belcour, R. Pacanowski, M. Delahaie, A. Laville-Geay, L. Eupherte

|

|

Journal of the Optical Society of America A (JOSA A). Vol. 31, No. 12. December 2014.

|

|

We compare the performance of various analytical retroreflecting bidirectional reflectance distribution function (BRDF)

models to assess how accurately they reproduce measured data of retroreflecting materials.

We introduce a new parametrization, the back vector parametrization, to analyze retroreflecting data,

and we show that this parametrization better preserves the isotropy of data. Furthermore,

we update existing BRDF models to improve the representation of retroreflective data.

|

|

Paper (Author's version)

|

|

Material Measurements will be available soon for download.


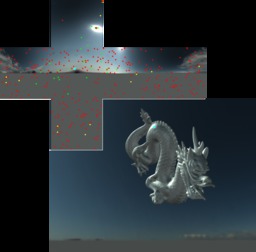

Position-dependent Importance Sampling of Light Field Luminaires

|

| H. Lu, R. Pacanowski and X. Granier |

|

IEEE Transactions on Visualization and Computer Graphics. Vol. 21, No. 2. Sept. 2014.

|

|

The possibility to use real world light sources (aka luminaires) for

synthesizing images greatly contributes to their physical realism.

Among existing models, the ones based on light fields are attractive

due to their ability to represent faithfully the near-field and

due to their possibility of being directly acquired.

In this paper, we introduce a dynamic sampling strategy for complex

light field luminaires with the corresponding unbiased estimator.

The sampling strategy is adapted, for each 3D scene position and each

frame, by restricting the sampling domain dynamically and by balancing

the number of samples between the different components of the representation.

This is achieved efficiently

by simple position-dependent affine transformations and restrictions of Cumulative

Distribution Functions that ensure that every generated sample conveys

energy and contributes to the final result.

Therefore, our approach only requires a low number of samples to

achieve almost converged results.

We demonstrate the efficiency of our approach on modern hardware by introducing a GPU-based

implementation.

Combined with a fast shadow algorithm, our solution exhibits interactive

frame rates for direct lighting for large measured luminaires.

|

| Paper(Author's version) |

VIDEO |

Supplemental Material (Author's version)


ALTA: A BRDF Analysis Library

|

| L. Belcour, P. Barla and R. Pacanowski |

| Workshop on Material Appearance Modeling: Issues and Acquisition. EGSR 2014. Lyon. |

|

In this document, we introduce ALTA, a

cross platform generic open-source library for Bidirectional Reflectance Distribution Function (BRDF) analysis.

Among other things, ALTA makes it possible to estimate BRDF model parameters from measured data,

to perform statistical analysis and also to export BRDF data models in a wide variety of formats.

|

| PAPER Author's version |

Slides of the presentation |

|

|

|

Optimizing BRDF Orientations for the Manipulation of Anisotropic Highlights

|

| B. Raymond, G. Guennebaud, P. Barla, R. Pacanowski and X. Granier |

| Presented at Eurographics 2014. Computer Graphics Forum. Vol. 33. No. 2. May 2014. |

|

This paper introduces a system for the direct editing of highlights produced by anisotropic BRDFs, which we call anisotropic highlights. We first provide a comprehensive analysis of the link between the direction of anisotropy and the shape of highlight curves for arbitrary object surfaces. The gained insights provide the required ingredients to infer BRDF orientations from a prescribed highlight tangent field. This amounts to a non-linear optimization problem, which is solved at interactive framerates during manipulation. Taking inspiration from sculpting software, we provide tools that give the impression of manipulating highlight curves while actually modifying their tangents. Our solver produces desired highlight shapes for a host of lighting environments and anisotropic BRDFs.

|

| PAPER Author's version |

Video |

| Scene Files for Global Illumination (e.g., Figure 14) |

|

|

|

Second-Order Approximation for Variance Reduction in Multiple Importance Sampling

|

| H. Lu, R. Pacanowski and X. Granier |

| Pacific Graphics 2013. Computer Graphics Forum. Vol. 32, No. 7. Oct. 2013. |

|

Monte Carlo techniques are widely used in Computer Graphics to generate realistic images. Multiple Importance Sampling reduces the impact of choosing a dedicated strategy by balancing the number of samples between different strategies. However, an automatic choice of the optimal balancing remains a difficult problem. Without any knowledge of the scene characteristics, the default choice is to select the same number of samples from different strategies and to use them with heuristic techniques (e.g., balance, power or maximum). In this paper, we introduce a second-order approximation of variance for the balance heuristic. Based on this approximation, we introduce an automatic distribution of samples for direct lighting without any prior knowledge of the scene characteristics. We demonstrate that for all our test scenes (with different types of materials, light sources and visibility complexity), our method actually reduces variance on average. We also propose an implementation with low overhead for offline and GPU applications. We hope that this approach will help in developing new balancing strategies.
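
To make the balance heuristic mentioned in this abstract concrete, here is a minimal sketch of the standard Multiple Importance Sampling weight for one sample. It illustrates the textbook heuristic only, not the second-order variance approximation or the automatic sample distribution proposed in the paper. The function name and argument layout are assumptions made for illustration.

```python
def balance_heuristic_weight(strategy, sample_counts, pdfs):
    """MIS weight of `strategy` for one sample under the balance heuristic.

    sample_counts[i]: number of samples drawn from strategy i
    pdfs[i]: density of strategy i evaluated at this sample
    """
    denominator = sum(n * p for n, p in zip(sample_counts, pdfs))
    if denominator == 0.0:
        return 0.0  # no strategy could have generated this sample
    return sample_counts[strategy] * pdfs[strategy] / denominator

# Default choice described in the abstract: the same number of samples
# from each strategy (here, hypothetically, light and BRDF sampling).
counts = [16, 16]
densities = [0.8, 0.2]
w_light = balance_heuristic_weight(0, counts, densities)
w_brdf = balance_heuristic_weight(1, counts, densities)
```

The weights of all strategies sum to one for any sample, which keeps the combined estimator unbiased; changing `counts` is the degree of freedom that an automatic balancing scheme would tune.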

|

| PAPER Author's version |

| Video |

Supplemental Results |

|

Presentation Slides

|

|

|

| Real-Time Importance Sampling of Dynamic Environment Maps |

| H. Lu, R. Pacanowski and X. Granier |

| Eurographics 2013. Computer Graphics Forum. Proceedings of Short Papers. |

|

We introduce a simple and effective technique for light-based

importance sampling of dynamic environment maps based on the

formalism of Multiple Importance Sampling (MIS).

The core idea is to balance per pixel the number of samples

selected on each cube map face according to a quick and conservative

evaluation of the lighting contribution: this increases the number

of effective samples.

In order to be suitable for dynamically generated or captured HDR

environment maps, everything is computed on-line for each frame

without any global preprocessing.

Our results illustrate that the low number of required samples

combined with a full-GPU implementation leads to real-time

performance with improved visual quality.

Finally, we illustrate that our MIS formalism can be easily extended to

other strategies such as BRDF importance sampling.

|

|

Bibtex from HAL

|

Minimal BibTeX

|

PAPER Author's version |

| Supplemental Results |

Presentation (without videos) |

|

Presentation as a Movie including videos (large file)

|

|

|

| Rational BRDF |

| R. Pacanowski, O. Salazar Celis, C. Schlick, X. Granier, P. Poulin, A. Cuyt. |

| IEEE Transactions on Visualization and Computer Graphics. Nov. 2012 (vol. 18 no. 11). |

|

Over the last two decades, much effort has been devoted to accurately measuring Bidirectional Reflectance Distribution Functions (BRDFs) of

real-world materials and to using the resulting data efficiently for rendering. Because of their large size, it is difficult to use directly measured

BRDFs for real-time applications, and fitting the most sophisticated analytical BRDF models is still a complex task. In this paper, we introduce

Rational BRDF, a general-purpose and efficient representation for arbitrary BRDFs, based on Rational Functions (RFs). Using an adapted

parametrization we demonstrate how Rational BRDFs offer (1) a more compact and efficient representation using low-degree RFs, (2) an

accurate fitting of measured materials with guaranteed control of the residual error, and (3) an efficient importance sampling by applying the

same fitting process to determine the inverse of the Cumulative Distribution Function (CDF) generated from the BRDF for use in Monte-Carlo

rendering.

|

| Editor's Version (with current BibTeX) |

|

PAPER Author's version |

| Supplemental Material |

Supplemental Results |

An

Addendum on fitting |

|

Slides from the I3D presentation

|

Early talk on RF fitting technique

|

| Fitting Data |

|

|

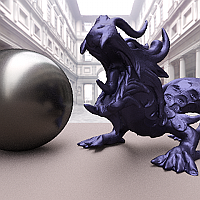

| Improving Shape Depiction under Arbitrary Rendering |

| R. Vergne, R. Pacanowski, P. Barla, X. Granier, C.Schlick. |

| IEEE Transactions on Visualization and Computer Graphics. December 2010. |

|

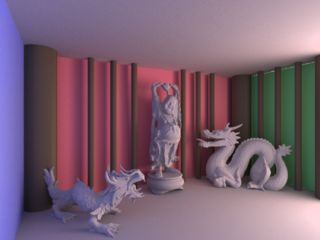

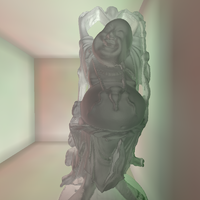

Based on the observation that shading conveys shape information

through intensity gradients, we present a new technique called

Radiance Scaling that modifies the classical shading equations to

offer versatile shape depiction functionalities. It works by

scaling reflected light intensities depending on both

surface curvature and material characteristics. As a result, diffuse

shading or highlight variations become correlated to surface feature variations,

enhancing concavities and convexities. The first advantage of such

an approach is that it produces satisfying results with any kind of

material for direct and global illumination: we demonstrate

results obtained with Phong and Ashikhmin-Shirley BRDFs, Cartoon shading,

sub-Lambertian materials, perfectly reflective or refractive

objects. Another advantage is that there is no restriction to the

choice of lighting environment: it works with a single light, area

lights, and inter-reflections. Third, it may be adapted to enhance surface

shape through the use of precomputed radiance data such as Ambient

Occlusion, Prefiltered Environment Maps or Lit Spheres. Finally, our

approach works in real-time on modern graphics hardware making it

suitable for any interactive 3D visualization.

|

| PAPER (author version) |

BibTeX |

|

|

| Volumetric Vector-based Representation for Indirect Illumination Caching. |

| R.Pacanowski, X. Granier, C.Schlick, P.Poulin. |

| J. Computer Science and Technology. Issue 5. Vol 25. September 2010. |

|

This paper introduces a caching technique based on a volumetric

representation that captures low-frequency indirect illumination.

This structure is intended for efficient storage and manipulation of

illumination. It is based on a 3D grid that stores a fixed set of irradiance

vectors. During preprocessing, this representation can be built

using almost any existing global illumination

software. During rendering, the indirect

illumination within a voxel is interpolated from its associated

irradiance vectors, and is used as

additional local light sources.

Compared with other techniques, the 3D vector-based

representation of our technique offers increased robustness against local

geometric variations of a scene.

We thus demonstrate that it may

be employed as an efficient and high-quality caching data

structure for bidirectional rendering techniques such as particle

tracing or Photon Mapping.
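
The interpolation step described in this abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: it stores a single irradiance vector per grid corner (the paper stores a fixed set of vectors), uses plain trilinear interpolation, and evaluates irradiance as the clamped dot product with the surface normal. All function names are hypothetical.

```python
def lerp(a, b, t):
    """Component-wise linear interpolation between vectors a and b."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def trilinear(corners, u, v, w):
    """Interpolate inside one voxel.

    corners[i][j][k] is the vector stored at corner (i, j, k);
    (u, v, w) are local coordinates in [0, 1] along x, y and z.
    """
    c00 = lerp(corners[0][0][0], corners[1][0][0], u)
    c10 = lerp(corners[0][1][0], corners[1][1][0], u)
    c01 = lerp(corners[0][0][1], corners[1][0][1], u)
    c11 = lerp(corners[0][1][1], corners[1][1][1], u)
    c0 = lerp(c00, c10, v)
    c1 = lerp(c01, c11, v)
    return lerp(c0, c1, w)

def irradiance(vector, normal):
    """Irradiance received by a surface with unit `normal` (assumed model)."""
    return max(0.0, sum(a * b for a, b in zip(vector, normal)))

# Voxel whose x = 0 face stores (0, 0, 1) and whose x = 1 face stores (0, 0, 3).
low, high = (0.0, 0.0, 1.0), (0.0, 0.0, 3.0)
corners = [[[low, low], [low, low]], [[high, high], [high, high]]]
vector = trilinear(corners, 0.5, 0.25, 0.75)
value = irradiance(vector, (0.0, 0.0, 1.0))
```

Because the grid stores vectors rather than scalar irradiance, the same interpolated value can be re-evaluated for any local normal, which is what gives the representation its robustness to small geometric variations.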

|

| PAPER |

BibTeX |

|

|

| Radiance Scaling for Versatile Surface Enhancement |

| Romain Vergne, Romain Pacanowski, Pascal Barla, Xavier Granier, Christophe Schlick |

| I3D '10: Proc. symposium on Interactive 3D graphics and games - 2010. BEST PAPER HONORABLE MENTION. |

|

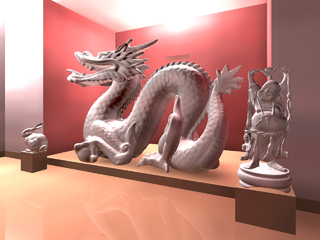

We present a novel technique called Radiance Scaling for the depiction of surface shape through shading.

It adjusts reflected light intensities in a way dependent on both surface curvature and material characteristics.

As a result, diffuse shading or highlight variations become correlated to surface feature variations, enhancing surface

concavities and convexities. This approach is more versatile compared to previous methods.

First, it produces satisfying results with any kind of material:

we demonstrate results obtained with Phong and Ashikhmin BRDFs, Cartoon shading, sub-Lambertian materials,

and perfectly reflective or refractive objects. Second, it imposes no restriction on lighting environment: it does not require a

dense sampling of lighting directions and works even with a single light. Third, it

makes it possible to enhance surface shape through the use of precomputed radiance data

such as Ambient Occlusion, Prefiltered Environment Maps or Lit Spheres. Our novel approach works in real-time on modern graphics hardware and

is faster than previous techniques.

|

| PAPER

|

BibTeX |

|

VIDEO STREAM

|

Interactive Presentation in Flash

|

|

|

| Flat Bidirectional Texture Functions |

| Julien Hadim, Romain Pacanowski, Xavier Granier, Christophe Schlick |

| Technical Report RR-7144, INRIA Bordeaux - Sud Ouest, Number RR-7144 - 2009 |

|

Highly-realistic materials in computer graphics are computationally and memory demanding. Currently, the most versatile techniques are based on Bidirectional Texture Functions (BTFs), an image-based approximation of appearance. Extremely realistic images may be quickly obtained with BTFs at the price of a huge amount of data. Even though many BTF compression schemes have been introduced in recent years,

the main remaining challenge arises from the fact that a BTF embeds many different optical phenomena generated by the underlying meso-geometry (parallax effects, masking, shadow casting, inter-reflections, etc.).

We introduce a new representation for BTFs that isolates parallax effects. On the one hand, we build a flattened BTF according to a global spatial parameterization of the underlying meso-geometry.

On the other hand, we generate a set of view-dependent indirection maps on this spatial parameterization to encode all the parallax effects.

We further analyze this representation on a varied set of synthetic BTF data to show its benefits on view-dependent coherency, and to find the best sampling strategy.

We also demonstrate that this representation is well suited for hardware acceleration on current GPUs.

|

| PAPER |

BibTeX |

|

|

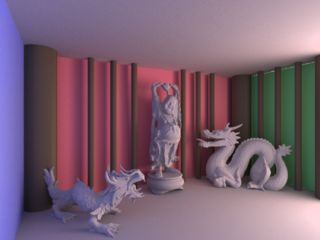

| Light Warping for Enhanced Surface Depiction |

| Romain Vergne, Romain Pacanowski, Pascal Barla, Xavier Granier, Christophe Schlick |

| ACM Transactions on Graphics (Proceedings of SIGGRAPH 2009) - 2009 |

|

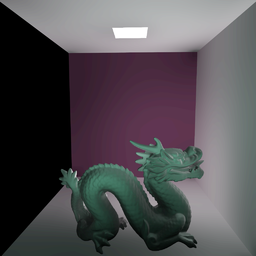

Recent research on the human visual system shows that our perception of object shape relies in part on compression and stretching of the reflected lighting environment onto its surface.

We use this property to enhance the shape depiction of 3D objects by locally warping the environment lighting around main surface features. Contrary to previous work, which requires specific illumination, material characteristics and/or stylization choices, our approach enhances surface shape without impairing the desired appearance. Thanks to our novel local shape descriptor, salient surface features are explicitly extracted in a view-dependent fashion at various scales without the need for any pre-process.

We demonstrate our system on a variety of rendering settings, using object materials ranging from diffuse to glossy, to mirror or refractive, with direct or global illumination,

and providing styles that range from photorealistic to non-photorealistic. The warping itself is very fast to compute on modern graphics hardware,

enabling real-time performance in direct illumination scenarios.

|

|

PAPER

|

BibTeX

|

| Supplemental Material |

Supplemental Results |

|

VIDEO

|

Interactive Presentation in Flash

|

|

|

| Volumetric Vector-Based Representation for Indirect Illumination Caching |

| Romain Pacanowski, Xavier Granier, Christophe Schlick, Pierre Poulin |

| Technical Report RR-6983, INRIA, Number RR-6983 - jul 2009 |

|

This report presents a volumetric representation that captures low-frequency indirect illumination, a structure intended for efficient storage and manipulation of illumination, both for caching and on graphics hardware.

It is based on a 3D texture that stores a fixed set of irradiance vectors.

This texture is built during a preprocessing step by using almost any existing global illumination software.

Then during the rendering step, the indirect illumination within a voxel is interpolated from its associated irradiance vectors,

and is used by the fragment shader as additional local light sources.

The technique can thus be considered as following the same trend as ambient occlusion or precomputed radiance transfer techniques,

as it tries to include visual effects from global illumination into real-time rendering engines.

However, its 3D vector-based representation offers additional robustness against local geometric variations of a scene.

We also demonstrate that our technique may be employed as an efficient and high-quality caching data structure for bidirectional rendering techniques.

|

| PAPER |

BibTeX |

| VIDEO |

|

|

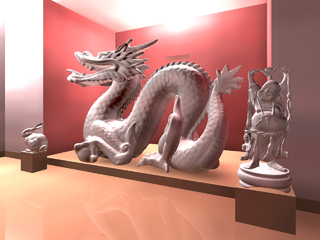

| Compact Structures for Interactive Global Illumination on Large Cultural Objects. |

| Romain Pacanowski, Mickaël Raynaud, Julien Lacoste, Xavier Granier, Patrick Reuter, Christophe Schlick, Pierre Poulin |

| International Symposium on Virtual Reality, Archaeology and Cultural Heritage: Shorts and Projects - Dec 2008 |

|

Cultural Heritage scenes usually consist of very large and detailed 3D objects

with high geometric complexity. Even the raw visualization of such 3D objects already

involves a large amount of memory and computation time. When trying to improve the sense of immersion and realism by using

global illumination techniques, the demand on these resources becomes prohibitive.

Our approach uses regular grids combined with a vector-based

representation to efficiently capture low-frequency indirect illumination.

A fixed set of irradiance vectors is stored in 3D textures (for complex

objects) and in 2D textures (for mostly planar objects). The vector-based

representation offers additional robustness against local variations of

the geometry. Consequently, the grid resolution can be set independently

of geometric complexity, and the memory footprint can therefore be

reduced. The irradiance vectors can be precomputed on a simplified

geometry.

For interactive rendering, we use an appearance-preserving

simplification of the geometry. The indirect illumination within a grid cell

is interpolated from its associated irradiance vectors, resulting in an

everywhere-smooth reconstruction.
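
To make the memory argument concrete, the sketch below compares the storage of a regular grid of irradiance vectors with that of a detailed triangle mesh. All numbers and parameter names are illustrative assumptions, not figures from the paper: the grid is assumed to hold `vectors_per_vertex` vectors per grid vertex, each with three components per color channel.

```python
def grid_bytes(res_x, res_y, res_z, vectors_per_vertex,
               bytes_per_component, color_channels=3):
    """Storage of a regular 3D grid of irradiance vectors (assumed layout)."""
    components = 3 * color_channels  # x, y, z for each color channel
    vertices = res_x * res_y * res_z
    return vertices * vectors_per_vertex * components * bytes_per_component


def mesh_bytes(num_vertices, num_triangles,
               bytes_per_float=4, bytes_per_index=4):
    """Storage of an indexed mesh with a position and a normal per vertex."""
    per_vertex = 6 * bytes_per_float      # 3 floats position + 3 floats normal
    per_triangle = 3 * bytes_per_index    # three vertex indices
    return num_vertices * per_vertex + num_triangles * per_triangle


# Hypothetical scene: a 32^3 grid versus a scanned object with 10M vertices.
grid_size = grid_bytes(32, 32, 32, vectors_per_vertex=6, bytes_per_component=2)
mesh_size = mesh_bytes(10_000_000, 20_000_000)
ratio = mesh_size / grid_size
```

The grid size depends only on its resolution, so refining the mesh changes `mesh_size` but leaves `grid_size` untouched.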

|

| PAPER |

BibTeX |

| VIDEO 1 |

VIDEO 2 |

|

|

| Efficient Streaming of 3D Scenes with Complex Geometry and Complex Lighting. |

| Romain Pacanowski, Mickaël Raynaud, Xavier Granier, Patrick Reuter, Christophe Schlick, Pierre Poulin |

| Web3D '08: Proceedings of the 13th International Symposium on 3D Web Technology, pages 11-17 - 2008 |

|

Streaming data to efficiently render complex 3D scenes in the presence of global illumination is still a challenging task. In this paper, we introduce a new data structure based

on a 3D grid of irradiance vectors to store the indirect illumination appearing on complex and detailed objects: the Irradiance Vector Grid (IVG).

This representation is independent of the geometric complexity and is suitable for different quantization schemes. Moreover, its streaming

over a network involves only a small overhead compared to detailed geometry, and can be achieved independently of the geometry. Furthermore, it can be efficiently

rendered using modern graphics hardware.

We demonstrate our new data structure in a new remote 3D visualization system that integrates indirect lighting streaming and progressive

transmission of the geometry, and study the impact of different strategies on data transfer.
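
As one possible reading of the quantization mentioned above, the following sketch applies uniform scalar quantization to a single vector component before transmission. It is an assumption for illustration only; the paper's actual quantization schemes are not described here, and the function names and value range are hypothetical.

```python
def quantize(value, lo, hi, bits):
    """Map a value in [lo, hi] to an integer code on `bits` bits."""
    levels = (1 << bits) - 1
    clamped = min(max(value, lo), hi)
    t = (clamped - lo) / (hi - lo)
    return round(t * levels)


def dequantize(code, lo, hi, bits):
    """Recover an approximate value from its integer code."""
    levels = (1 << bits) - 1
    return lo + (code / levels) * (hi - lo)


def max_error(lo, hi, bits):
    """Worst-case reconstruction error: half a quantization step."""
    levels = (1 << bits) - 1
    return 0.5 * (hi - lo) / levels


# Hypothetical component range [-1, 1], sent with 8 or 16 bits per component.
component = 0.3127
code8 = quantize(component, -1.0, 1.0, 8)
approx8 = dequantize(code8, -1.0, 1.0, 8)
approx16 = dequantize(quantize(component, -1.0, 1.0, 16), -1.0, 1.0, 16)
```

Fewer bits reduce the amount of data streamed per grid vertex at the cost of a larger reconstruction error, bounded by `max_error`.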

|

| PAPER |

BibTeX |

| General VIDEO |

Streaming geometry |

Streaming lighting |

Interleaved streaming |

|

|

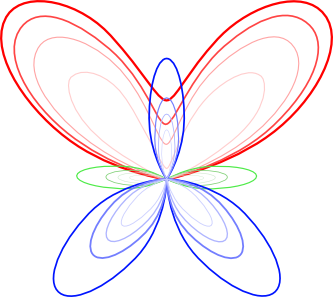

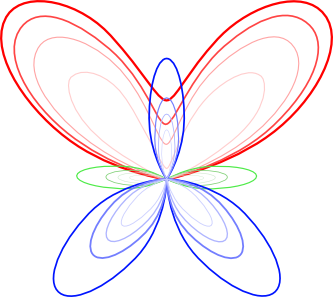

| Sketch and Paint-based Interface for Highlight Modeling |

| Romain Pacanowski, Xavier Granier, Christophe Schlick, Pierre Poulin |

| EUROGRAPHICS Workshop on Sketch-Based Interfaces and Modeling - Jun 2008 |

|

In computer graphics, highlights capture much of the appearance of light reflection off a surface. They are generally limited to pre-defined models (e.g., Phong, Blinn) or to measured data.

In this paper, we introduce new tools and a corresponding highlight model to provide computer graphics artists with a more expressive approach to designing highlights.

For each defined light key-direction, the artist simply sketches and paints the main highlight features (shape, intensity, and color) on a plane oriented perpendicularly

to the reflected direction.

For other light-and-view configurations, our system smoothly blends the different user-defined highlights.

Based on GPU abilities, our solution allows real-time editing and feedback.

We illustrate our approach with a wide range of highlights, with complex shapes and varying colors. This solution also demonstrates the simplicity of the introduced tools.
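
The blending of user-defined highlights between key light directions could look something like the sketch below. The weighting rule (a power of the clamped cosine between the current light direction and each key direction, normalized to sum to one) is an assumption chosen for illustration, not the scheme used in the paper; `blend_weights` and `sharpness` are hypothetical names.

```python
def blend_weights(light_dir, key_dirs, sharpness=8.0):
    """Normalized weights favoring the key directions closest to light_dir.

    All directions are assumed to be unit-length 3D vectors.
    """
    raw = []
    for key in key_dirs:
        cos_angle = max(0.0, sum(a * b for a, b in zip(light_dir, key)))
        raw.append(cos_angle ** sharpness)
    total = sum(raw)
    if total == 0.0:
        # No key direction faces the light: fall back to a uniform blend.
        return [1.0 / len(key_dirs)] * len(key_dirs)
    return [r / total for r in raw]


# Two key directions along x and y; the light sits exactly on the first key.
keys = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
on_key = blend_weights((1.0, 0.0, 0.0), keys)
# A light direction closer to the second key (unit vector 0.6, 0.8, 0).
between = blend_weights((0.6, 0.8, 0.0), keys)
```

With this rule, a light placed exactly on a key direction reproduces that key's highlight, and intermediate directions produce a weighted mix of the painted highlights.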

|

| PAPER |

BibTeX |

| Presented video |

Classical editing session |

Bitmap as lobe shape |

|

|

| Gestion de la complexité géométrique dans le calcul d'éclairement pour la présentation publique de scènes archéologiques complexes. |

| Romain Pacanowski, Xavier Granier, Christophe Schlick |

| Virtual Retrospect 2007 : Archéologie et Réalité Virtuelle - 2007 |

|

For cultural heritage, more and more 3D objects are acquired using 3D scanners [Levoy 2000].

The resulting objects are highly detailed and visually rich, but their geometric complexity requires specific rendering methods.

We first show how to simplify those objects using a low-resolution mesh with its associated normal maps [Boubekeur 2005] which encode details.

Using this representation, we show how to add global illumination with a grid-based and vector-based representation [Pacanowski 2005].

This grid efficiently captures low-frequency indirect illumination.

We use 3D textures (for large objects) and 2D textures (for quasi-planar objects) for storing a fixed set of irradiance vectors.

These grids are built during a preprocessing step by using almost any existing stochastic global illumination approach.

During the rendering step, the indirect illumination within a grid cell is interpolated from its associated irradiance vectors, resulting in an everywhere-smooth representation.

Furthermore, the vector-based representation offers additional robustness against local variations of the geometric properties of a scene.

|

| PAPER (in French) |

BibTeX |

PRESENTATION |

|

|

| Nouvelle représentation directionnelle pour l'éclairage global |

| Romain Pacanowski, Xavier Granier, Christophe Schlick |

| Actes des 18ièmes Journées de l'Association Française d'Informatique Graphique (AFIG) - November 2005 |

|

In this paper, we introduce a new representation of the illumination function, as a first step toward a volumetric, multiresolution reconstruction.

Our representation is designed to be robust to local variations of the geometry and of its material properties, so that an object can be embedded in a lighting solution.

We show how our representation can be used as a cache structure for diffuse indirect illumination within the ray-tracing algorithm.

|

| PAPER (in French) |

BibTeX |

PRESENTATION |

|

|