2015 RESULTS

Task 1 - Fast linear algebra solver in sparse linear solvers.

Fast linear algebra solver in sparse linear solvers.

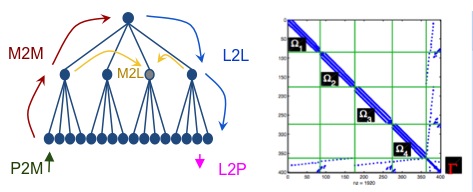

The main drawback of direct sparse linear solvers is their cost, both in terms of memory and number of operations. It explains why iterative or hybrid solvers are widely used, despite their possible lack of robustness, to solve large systems that would require too much time or memory with a direct approach. In order to reduce the costs of direct solvers, a recent strategy relies on hierarchical matrix decomposition to compress the information. If the resulting accuracy in inherently lower than a classical direct factorization, such a solution can be used as a “good” preconditioner for iterative methods, by providing an initial guess close to the exact solution. Those low-rank solvers are nowadays widely used for dense systems, leading to solvers that are acceptable to tackle many problems. Nevertheless, using such a strategy in the context of sparse matrices is more difficult, as long as data structures are more heterogeneous. In this work, we have presented a preliminary study on the use of ℋ-Matrix arithmetic in the context of PaStiX. The purpose was to conserve the parallelism of a supernodal solver while compressing some parts that correspond to operations representing the burden on scalability. For instance, in a classical 3D mesh problem, factorizing the last diagonal block is asymptotically as costly as the complete solver.In the context of the master thesis of Grégoire Pichon, we described a preliminary fast direct solver using HODLR library to compress large blocks appearing in the symbolic structure of the PaStiX sparse direct solver. We have presented our general strategy before analyzing the practical gains in terms of memory and floating point operations with respect to a theoretical study of the problem [Workshop on Fast Solvers, Toulouse, June 2015]. And we have also investigated ways to enhance the overall performance of the solver.

Moreover, among the preprocessing steps of a sparse direct solver, reordering and block symbolic factorization are two major steps to reach a suitable granularity for BLAS kernels efficiency and runtime management. When ordering separators, the widely used algorithm is the Reverse Cuthill-McKee algorithm. This strategy works with only a local view of a separator graph, considering a vertex and its neighbourhood. But we expect that an algorithmic approach with a complete knowledge of interactions within a separator will lead to a better ordering quality, and thus enhance PaStiX performance during the numerical steps. Grégoire Pichon (who has done his Master with us and will continue a PhD with us as well) studied and presented a reordering strategy to increase off-diagonal block sizes. It enhances BLAS kernels, allows to handle larger tasks, and reduce the factorization time in the PaStiX solver implemented over StarPU and Parsec runtimes [Sparse Days, Saint-Girons, June 2015]. We developed a distributed-memory library for computations with HSS matrices. Such matrices appear in many applications, e.g., finite element methods, boundary element methods, etc. Exploiting this structure allows for fast solution of linear systems and/or fast computation of matrix-vector products, which are the two main building blocks of matrix computations. The compression algorithm that we use, that computes the HSS form of an input dense matrix, relies on randomized sampling with a novel adaptive sampling mechanism. We investigated the parallelization of this algorithm, along with structured product, structured factorization and solution routines. The efficiency of the approach is demonstrated on large problems from different academic and industrial applications, on up to 8,000 cores. This work is part of a more global effort, the STRUMPACK (STRUctured Matrices PACKage) software package for computations with sparse and dense structured matrices. Hence, although useful on their own right, the routines also represent a step in the direction of a distributed-memory sparse solver.Casadei

We developed a sparse linear system solver that is based on a multifrontal variant of Gaussian elimination, and exploits low-rank approximation of the resulting dense frontal matrices. We use hierarchically semiseparable (HSS) matrices, which have low-rank off-diagonal blocks, to approximate the frontal matrices. For HSS matrix construction, a randomized sampling algorithm is used together with interpolative decompositions. The combination of the randomized compression with a fast ULV HSS factorization leads to a solver with lower computational complexity than the standard multifrontal method for many applications, resulting in speedups up to 7 fold for problems in our test suite. The implementation targets many-core systems by using task parallelism with dynamic runtime scheduling. Numerical experiments show performance improvements over state-of-the-art sparse direct solvers. The implementation achieves high performance and good scalability on a range of modern shared memory parallel systems, including the Intel Xeon Phi (MIC).

Task 2 - Improved parallelism for modern computers for heterogeneous many-cores.

Task 3 - Fast linear solvers for weak-hierarchical matrices

This can be, in some sense, considered an extension of the block incomplete LU (ILU) factorization. In a block ILU factorization, new fill-ins (i.e., blocks that are created during the elimination process which are originally zero) are ignored, and therefore, the block sparsity pattern of the matrix is preserved. In the proposed algorithm, instead, we use low-rank approximations to compress such new fill-ins. Using a tree structure, new fill-ins at the fine level are compressed and pushed to the parent (coarse) level. The elimination+compression process is done in a bottom-to-top traversal.

Therefore, the proposed algorithm has some formal similarities with algebraic multigrid (AMG) methods. However, the two methods differ in the way they build the coarse system, and use restriction and prolongation operators. In AMG, the original system is solved at different levels (from fine to coarse). Here, the compressed fill-ins – corresponding to the Schur complements – of each level are solved at the coarser level above. Note that the proposed algorithm is purely algebraic, similar to AMG. If the matrix comes from discretization of a PDE on a physical grid, the grid information can be exploited to improve the performance of the solver. This novel algorithm computes the LU factorization of sparse matrix using Gauss elimination. L and U matrices are calculated and stored with almost linear complexity with the size of the problem using hierarchical low-rank structures. The accuracy of the factorization, , can be determined a priori. The time and memory complexity of the factorization are O[n 2(1/)] and O( n 1/), respectively. The solver can be used as a stand-alone direct solve with tunable accuracy. The factorization part is completely separate from the solve part and is generally more expensive. This makes the algorithm appealing to be used as a black-box high accuracy preconditioner in conjunction with an iterative solver. We have implemented the algorithm in C++ (the code can be downloaded from http://bitbucket.org/hadip/lorasp), and benchmarked it as both a stand-alone solver, and a preconditioner in conjunction with the generalized minimum residual (GMRES) iterative solver.

Menu

Menu See also

See also Contact

Contact